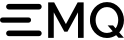

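Hello, a quick question: we are running EMQX 5.7.2 with a little over 700 connections, and the load on one node is much higher than on the other nodes.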

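Are the connections balanced across the three nodes? If they are not, you can use the rebalancing feature: Node Evacuation and Cluster Load Rebalancing | EMQX Docs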

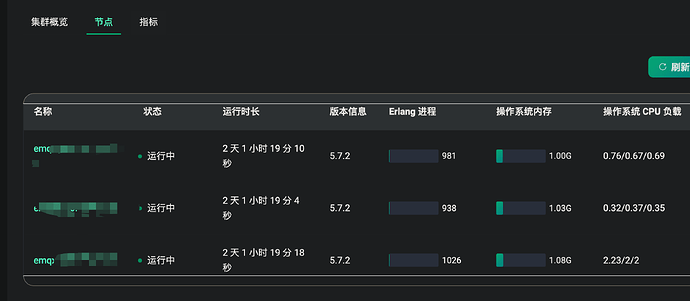

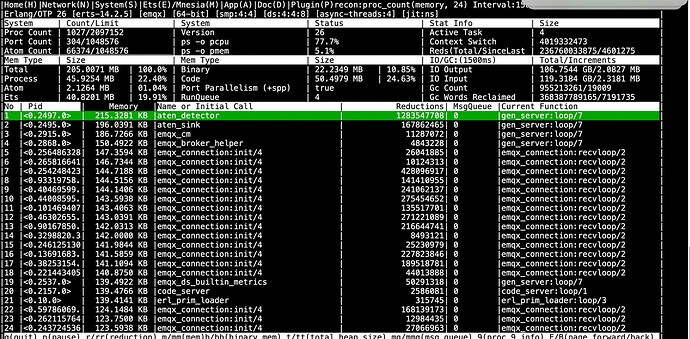

If they are roughly balanced, go to the node with the high load, run emqx remote_console to enter the EMQX console, and then run:

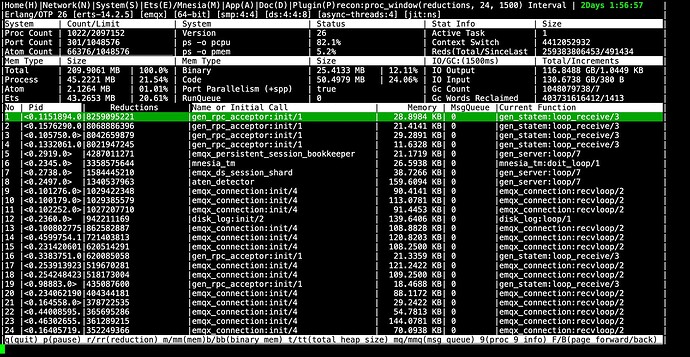

observer_cli:start().

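This shows whether the system currently has a bottleneck.

Please also take two screenshots:

- In this view, type mq and press Enter to sort by message queue, then take a screenshot.

- Then type rr and press Enter and take another screenshot.

Type q and press Enter to leave this view. Press Ctrl + C twice to exit the console.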

The connections are balanced. We distribute them across the 3 nodes through an SLB in round-robin mode.

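It looks like this is caused by gen_rpc (inter-node message transport). I'm not yet sure whether it is a bug. Try turning off the durable_session / persistent_session related features and see whether the load drops.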
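A minimal sketch of what turning the feature off might look like, assuming it is controlled by the `durable_sessions.enable` key in the node's configuration. Whether the change can take effect without restarting the node is not confirmed here:

```
# Assumption: durable sessions are controlled by this key in the node configuration.
durable_sessions.enable = false
```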

This is already running in production, so we can't restart it casually.

We can't determine the cause yet. It looks like it may be a bug, but we would need to look at the system remotely to know.

For the remote session, how can we assist you on our side?

WeChat: 18253232330

OK, I've added you.

On the node with high CPU usage, we created a trace using redbug:

redbug:start("gen_rpc_acceptor -> return").

We found that gen_rpc_acceptor was receiving messages at a high rate:

    % 03:28:52 <0.26366990.5>(dead)
    % gen_rpc_acceptor:call_middleman(emqx_ds_replication_layer, do_next_v1, [messages,<<"8">>,
        #{1 => 2,2 => 1,
          3 =>
              #{1 => 2,
                2 => [<<"localserver">>,<<"shopId">>,<<"508175243">>],
                3 => 1729213770182000,
                4 => {4080822714,[<<"508175243">>]},
                5 => <<>>}},
        100])

    % 03:28:52 <0.26370162.5>(dead)
    % gen_rpc_acceptor:call_middleman/3 -> {exit,
        {call_middleman_result,
         {ok,
          #{1 => 2,2 => 1,
            3 =>
                #{1 => 2,
                  2 =>
                      [<<"localserver">>,
                       <<"shopId">>,<<"504232254">>],
                  3 => 1729208970791000,
                  4 =>
                      {4080822714,[<<"504232254">>]},
                  5 => <<>>}},
          []}}}

    % 03:28:52 <0.26366990.5>(dead)
    % gen_rpc_acceptor:call_middleman/3 -> {exit,
        {call_middleman_result,
         {ok,
          #{1 => 2,2 => 1,
            3 =>
                #{1 => 2,
                  2 =>
                      [<<"localserver">>,
                       <<"shopId">>,<<"508175243">>],
                  3 => 1729213770182000,
                  4 =>
                      {4080822714,[<<"508175243">>]},
                  5 => <<>>}},
          []}}}

    % 03:28:52 <0.26370269.5>(dead)
    % gen_rpc_acceptor:call_middleman(emqx_ds_replication_layer, do_next_v1, [messages,<<"6">>,
        #{1 => 2,2 => 1,
          3 =>
              #{1 => 2,
                2 => [<<"localserver">>,<<"shopId">>,<<"508175243">>],
                3 => 1729213770182000,
                4 => {4080822714,[<<"508175243">>]},
                5 =>
                    <<243,60,105,186,0,6,36,183,230,83,101,117,95,153,139,251,183,
                      161,214,80>>}},
        100])

    % 03:28:52 <0.26370269.5>(dead)
    % gen_rpc_acceptor:call_middleman/3 -> {exit,
        {call_middleman_result,
         {ok,
          #{1 => 2,2 => 1,
            3 =>
                #{1 => 2,
                  2 =>
                      [<<"localserver">>,
                       <<"shopId">>,<<"508175243">>],
                  3 => 1729213770182000,
                  4 =>
                      {4080822714,[<<"508175243">>]},
                  5 =>
                      <<243,60,105,186,0,6,36,183,
                        230,83,101,117,95,153,139,
                        251,183,161,214,80>>}},
          []}}}

    % 03:28:52 <0.26370253.5>(dead)
    % gen_rpc_acceptor:call_middleman(emqx_ds_replication_layer, do_next_v1, [messages,<<"10">>,
        #{1 => 2,2 => 1,
          3 =>
              #{1 => 2,
                2 => [<<"localserver">>,<<"shopId">>,<<"508175243">>],
                3 => 1729213770182000,
                4 => {4080822714,[<<"508175243">>]},
                5 =>
                    <<243,60,105,186,0,6,36,183,230,83,101,117,95,153,139,251,183,
                      169,96,0>>}},
        100])

    % 03:28:52 <0.26370253.5>(dead)
    % gen_rpc_acceptor:call_middleman/3 -> {exit,
        {call_middleman_result,
         {ok,
          #{1 => 2,2 => 1,
            3 =>
                #{1 => 2,
                  2 =>
                      [<<"localserver">>,
                       <<"shopId">>,<<"508175243">>],
                  3 => 1729213770182000,
                  4 =>
                      {4080822714,[<<"508175243">>]},
                  5 =>
                      <<243,60,105,186,0,6,36,183,
                        230,83,101,117,95,153,139,
                        251,183,169,96,0>>}},
          []}}}

    redbug done, msg_count - 5

    v5.7.2(emqx@10.128.17.32)2>

There is also a warning log message on one of the nodes with normal CPU load:

{"time":1729219792582434,"level":"warning","msg":"emqx_persistent_session_ds_replay_inconsistency","clientid":"localserver/clientId/505050102","username":"test","got":"{srs,<<\"10\">>,1,#{1 => 2,2 => <<\"10\">>,3 => #{1 => 2,2 => 1,3 => #{1 => 2,2 => [<<\"localserver\">>,<<\"shopId\">>,<<\"505050102\">>],3 => 1729213264491000,4 => {4080822714,[<<\"505050102\">>]},5 => <<243,60,105,186,0,6,36,183,87,236,171,203,191,61,63,26,229,248,171,40>>}}},#{1 => 2,2 => <<\"10\">>,3 => #{1 => 2,2 => 1,3 => #{1 => 2,2 => [<<\"localserver\">>,<<\"shopId\">>,<<\"505050102\">>],3 => 1729213264491000,4 => {4080822714,[<<\"505050102\">>]},5 => <<243,60,105,186,0,6,36,183,89,198,112,132,242,211,158,118,188,117,20,24>>}}},1,11,0,12,0,false,1}","expected":"{srs,<<\"10\">>,1,#{1 => 2,2 => <<\"10\">>,3 => #{1 => 2,2 => 1,3 => #{1 => 2,2 => [<<\"localserver\">>,<<\"shopId\">>,<<\"505050102\">>],3 => 1729213264491000,4 => {4080822714,[<<\"505050102\">>]},5 => <<243,60,105,186,0,6,36,183,87,236,171,203,191,61,63,26,229,248,171,40>>}}},#{1 => 2,2 => <<\"10\">>,3 => #{1 => 2,2 => 1,3 => #{1 => 2,2 => [<<\"localserver\">>,<<\"shopId\">>,<<\"505050102\">>],3 => 1729213264491000,4 => {4080822714,[<<\"505050102\">>]},5 => <<243,60,105,186,0,6,36,183,89,210,48,221,252,214,168,98,223,164,156,168>>}}},1,11,0,12,0,false,1}","mfa":"{emqx_persistent_session_ds,replay_batch,3}","peername":"39.188.10.240:22121","pid":"<0.1926884.0>","line":651}

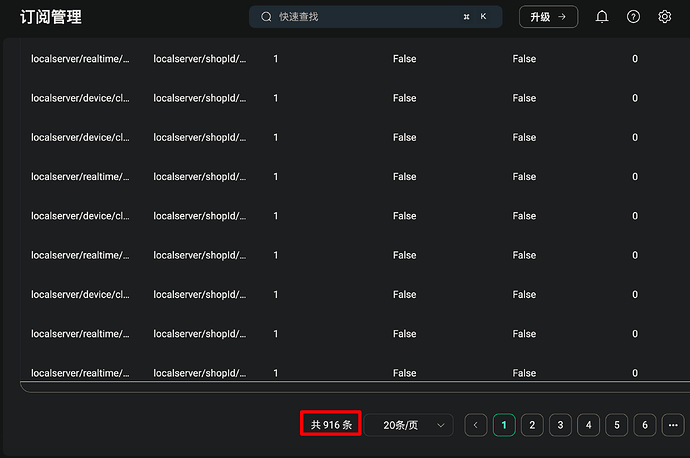

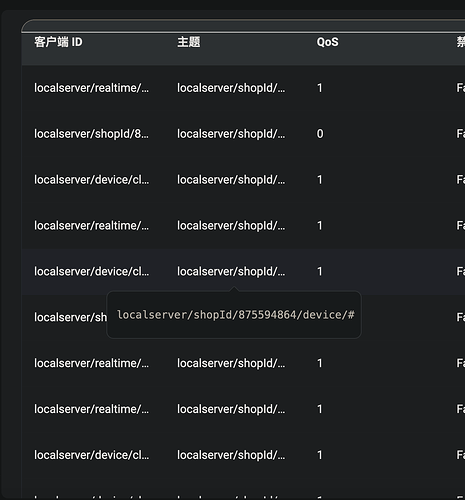

The user is using the durable session feature:

durable_sessions.enable = true

The total message rate is very low:

And they have fewer than 1K subscribers:

The topic example: