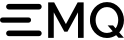

As time goes on, the total memory used by EMQX keeps increasing.

How can I have the process killed when “maximum heap size reached” is logged?

2024-01-23 00:33:21.096 [error] Process: <0.8166.8> on node ‘emqx@127.0.0.1’

Context: maximum heap size reached

Max Heap Size: 2097152

Total Heap Size: 2787348

Kill: false

Error Logger: true

GC Info: [{old_heap_block_size,514838},

{heap_block_size,2272494},

{mbuf_size,16},

{recent_size,305321},

{stack_size,122},

{old_heap_size,455223},

{heap_size,832890},

{bin_vheap_size,33306},

{bin_vheap_block_size,46422},

{bin_old_vheap_size,8341},

{bin_old_vheap_block_size,46422}]
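To put the numbers in this log into familiar units: the Erlang VM reports heap sizes in machine words, not bytes. The sketch below converts them, assuming a 64-bit VM (8-byte words). `WORD_SIZE` and `words_to_mib` are illustrative names, not part of EMQX.

```python
# Heap sizes in this kind of Erlang report are given in words.
# Assumption: 64-bit VM, so one word is 8 bytes.
WORD_SIZE = 8


def words_to_mib(words: int, word_size: int = WORD_SIZE) -> float:
    """Convert a heap size in words to mebibytes."""
    return words * word_size / (1024 * 1024)


max_heap_words = 2097152    # "Max Heap Size" from the log above
total_heap_words = 2787348  # "Total Heap Size" from the log above

print(f"limit : {words_to_mib(max_heap_words):.2f} MiB")
print(f"actual: {words_to_mib(total_heap_words):.2f} MiB")
```

Under that assumption the limit is 16 MiB and the process was at about 21 MiB. `Kill: false` reflects the `kill` option of Erlang's `max_heap_size` process flag: when it is false, the VM only reports the event and does not terminate the process.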

Hi Chris. EMQX automatically kills client processes that exceed the memory limit, so there is no need to kill them manually.

For example, in 5.x you can refer to this configuration:

force_shutdown {

enable = true

max_mailbox_size = 1000

max_heap_size = "32MB"

}

Is this not supported in version 4.2.14?

I changed every parameter I could think of, but none of it worked, and eventually the system OOM killer kicked in.

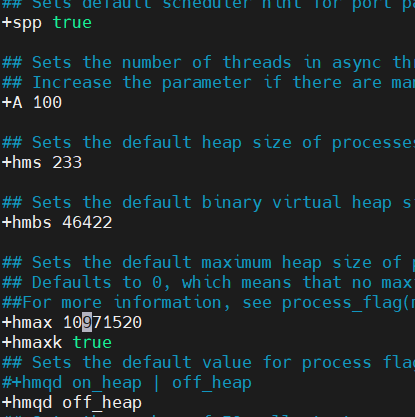

My configuration as follows:

emqx.conf:

zone.external.force_gc_policy = 100|5MB

zone.external.force_shutdown_policy = 300|10MB

zone.external.max_packet_size = 1MB

zone.external.max_mqueue_len = 300

zone.external.session_expiry_interval = 10m

vm.args:

That is the version 5 format. In 4.x it is equivalent to zone.external.force_shutdown_policy = 1000|32MB
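The 4.x `force_shutdown_policy` value packs the same two limits into one string, `<max_mailbox_size>|<max_heap_size>`. As a rough illustration of the mapping, here is a small sketch that splits such a string and renders it in the 5.x layout. The function names are hypothetical, and the 1024-based size units are an assumption.

```python
import re

# Assumption: size suffixes are 1024-based (KB, MB, GB).
_UNITS = {"KB": 1024, "MB": 1024**2, "GB": 1024**3}


def parse_policy(policy: str) -> tuple[int, int]:
    """Split '<max_mailbox_size>|<max_heap_size>' into (messages, bytes)."""
    mailbox, heap = (part.strip() for part in policy.split("|"))
    match = re.fullmatch(r"(\d+)\s*(KB|MB|GB)", heap, re.IGNORECASE)
    if match is None:
        raise ValueError(f"unsupported heap size: {heap!r}")
    return int(mailbox), int(match.group(1)) * _UNITS[match.group(2).upper()]


def to_v5_block(policy: str) -> str:
    """Render the same two limits in the 5.x force_shutdown layout."""
    mailbox, heap = (part.strip() for part in policy.split("|"))
    return (
        "force_shutdown {\n"
        "  enable = true\n"
        f"  max_mailbox_size = {mailbox}\n"
        f'  max_heap_size = "{heap}"\n'
        "}"
    )


print(parse_policy("300|10MB"))
print(to_v5_block("300|10MB"))
```

With the value from the configuration above, `300|10MB`, the limits are a mailbox of 300 messages and a 10 MB heap.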

If you still run into OOM issues, it is worth investigating the memory footprint of the EMQX VM close to the time of the crash, for example:

- use `emqx_ctl vm memory` to print the VM memory distribution
- use `emqx_ctl recon proc_count memory 100` to print the 100 processes that use the most memory

BTW, 4.2 is past our maintenance cycle. Please consider upgrading to 4.4 or 5.x in the future.

emqx_ctl vm memory

memory/total : 6452771512

memory/processes : 3279384232

memory/processes_used : 3279378656

memory/system : 3173387280

memory/atom : 1443993

memory/atom_used : 1431260

memory/binary : 3108468544

memory/code : 30387746

memory/ets : 7269568
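To read this output more easily, the following sketch turns it into shares of the total. It only re-uses the figures pasted above (values in bytes); `parse_vm_memory` is an illustrative helper, not an EMQX tool.

```python
# Output of `emqx_ctl vm memory`, copied from the post above (values in bytes).
VM_MEMORY = """
memory/total : 6452771512
memory/processes : 3279384232
memory/processes_used : 3279378656
memory/system : 3173387280
memory/atom : 1443993
memory/atom_used : 1431260
memory/binary : 3108468544
memory/code : 30387746
memory/ets : 7269568
"""


def parse_vm_memory(text: str) -> dict[str, int]:
    """Parse 'memory/<name> : <bytes>' lines into a {name: bytes} dict."""
    stats = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        stats[key.strip().removeprefix("memory/")] = int(value)
    return stats


stats = parse_vm_memory(VM_MEMORY)
total = stats["total"]
for name in ("processes", "binary", "ets", "code", "atom"):
    print(f"{name:<10} {stats[name] / total:6.1%}")
```

Process heaps and binaries each make up roughly half of the 6.4 GB total, while ETS, code and atoms are negligible. That suggests looking at individual processes holding large amounts of message data rather than at tables or loaded code.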

emqx_ctl recon proc_count memory 10

[{<0.6096.0>,2091810560,

[{current_function,{gen,do_call,4}},{initial_call,{proc_lib,init_p,5}}]},

{<0.12448.13>,1132481760,

[{current_function,{gen,do_call,4}},{initial_call,{proc_lib,init_p,5}}]},

{<0.17894.21>,13821256,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.935.22>,603752,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.1288.0>,461412,

[application_controller,

{current_function,{gen_server,loop,7}},

{initial_call,{erlang,apply,2}}]},

{<0.923.22>,426888,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.918.22>,426888,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.913.22>,426888,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.1402.0>,372648,

[ranch_sup,

{current_function,{gen_server,loop,7}},

{initial_call,{proc_lib,init_p,5}}]},

{<0.2418.0>,286296,

[{current_function,{emqx_connection,recvloop,2}},

{initial_call,{proc_lib,init_p,5}}]}]
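A quick way to see how lopsided this list is: the sketch below parses the first three entries and compares each process with the `memory/processes` figure from `emqx_ctl vm memory`. It assumes both snapshots were taken at about the same time. The regular expression and helper names are illustrative only.

```python
import re

# First entries of the `emqx_ctl recon proc_count memory 10` output above.
RECON_OUTPUT = """
[{<0.6096.0>,2091810560,
 [{current_function,{gen,do_call,4}},{initial_call,{proc_lib,init_p,5}}]},
{<0.12448.13>,1132481760,
 [{current_function,{gen,do_call,4}},{initial_call,{proc_lib,init_p,5}}]},
{<0.17894.21>,13821256,
 [{current_function,{emqx_connection,recvloop,2}},
 {initial_call,{proc_lib,init_p,5}}]},
"""

# Matches "{<pid>,<bytes>, ... {current_function,{Mod,Fun,Arity}}".
ENTRY = re.compile(
    r"\{<(?P<pid>[\d.]+)>,(?P<mem>\d+),.*?"
    r"\{current_function,\{(?P<mod>\w+),(?P<fun>\w+),(?P<arity>\d+)\}\}",
    re.DOTALL,
)


def parse_proc_count(text: str) -> list[tuple[str, int, str]]:
    """Return (pid, memory_bytes, 'mod:fun/arity') for each entry."""
    return [
        (m["pid"], int(m["mem"]), f'{m["mod"]}:{m["fun"]}/{m["arity"]}')
        for m in ENTRY.finditer(text)
    ]


PROCESSES_TOTAL = 3279384232  # memory/processes from `emqx_ctl vm memory`
procs = parse_proc_count(RECON_OUTPUT)
for pid, mem, fn in procs:
    print(f"<{pid}> {mem / 2**20:9.1f} MiB {mem / PROCESSES_TOTAL:6.1%} {fn}")
```

With these figures, the two processes sitting in `gen:do_call/4` hold roughly 98% of all process memory, and every other connection is small by comparison.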

Output from emqx remote_console on the remote server:

========================================================================================

'emqx@127.0.0.1' 01:01:02

Load: cpu 1 Memory: total 5515460 binary 2269778

procs 381 processes 3072341 code 29676

runq 0 atom 1410 ets 7194

Pid Name or Initial Func Time Reds Memory MsgQ Current Function

----------------------------------------------------------------------------------------

<8867.29600.2> emqx_connection:init '-' 42******** 2246890 gen:do_call/4

<8867.19863.2> emqx_connection:init '-' 42******** 2320382 gen:do_call/4

<8867.28846.11>emqx_connection:init '-' 753 4723508 0 emqx_connection:recv

<8867.28855.11>emqx_connection:init '-' 753 4722476 0 emqx_connection:recv

<8867.28890.11>emqx_connection:init '-' 753 4721388 0 emqx_connection:recv

<8867.28845.11>emqx_connection:init '-' 753 4720788 0 emqx_connection:recv

<8867.28851.11>emqx_connection:init '-' 712 2919140 0 emqx_connection:recv

<8867.28840.11>emqx_connection:init '-' 753 1948636 0 emqx_connection:recv

<8867.28959.11>emqx_connection:init '-' 891 1804500 0 emqx_connection:recv

<8867.29000.11>emqx_connection:init '-' 218185 1804456 0 emqx_connection:recv

<8867.28946.11>emqx_connection:init '-' 753 1804444 0 emqx_connection:recv

<8867.28862.11>emqx_connection:init '-' 2343 1803900 0 emqx_connection:recv

<8867.1405.0> ranch_sup '-' 1 602176 0 gen_server:loop/7

<8867.28966.11>etop_txt:init/1 '-' 19209 426700 0 etop:update/1

<8867.1288.0> application_controll '-' 1 319556 0 gen_server:loop/7

<8867.28942.11>erlang:apply/2 '-' 1 264164 0 shell:get_command1/5

<8867.1621.0> ranch_conns_sup:init '-' 42 236152 0 ranch_conns_sup:loop

<8867.1599.0> ranch_conns_sup:init '-' 2 230544 0 ranch_conns_sup:loop

<8867.1954.0> emqx_connection:init '-' 3434 143280 0 emqx_connection:recv

<8867.1807.0> emqx_connection:init '-' 3574 143224 0 emqx_connection:recv

========================================================================================

Information for one process:

(emqx@127.0.0.1)3> process_info(pid(0,29600,2), current_stacktrace).

{current_stacktrace,[{gen,do_call,4,

[{file,"gen.erl"},{line,167}]},

{gen_server,call,3,[{file,"gen_server.erl"},{line,219}]},

{emqx_cm,takeover_session,2,

[{file,"emqx_cm.erl"},{line,267}]},

{emqx_cm,'-open_session/3-fun-1-',5,

[{file,"emqx_cm.erl"},{line,222}]},

{emqx_cm_locker,trans,3,

[{file,"emqx_cm_locker.erl"},{line,46}]},

{emqx_channel,process_connect,2,

[{file,"emqx_channel.erl"},{line,434}]},

{emqx_connection,with_channel,3,

[{file,"emqx_connection.erl"},{line,553}]},

{emqx_connection,process_msg,3,

[{file,"emqx_connection.erl"},{line,293}]}]}

rp(erlang:process_info(pid(0,19863,2), backtrace)).

{backtrace,<<"Program counter: 0x00007fe26a53d860 (gen:do_call/4 + 3168)\n

CP: 0x0000000000000000 (invalid)\n\n

0x00007fde7c33fa28 Return addr 0x00007fe26a551908 (gen_server:call/3 + 128)\n

y(0) infinity\n

y(1) []\n

y(2) #Ref<0.2499957634.4089446403.17060>\n

y(3) <0.19863.2>\n\n

0x00007fde7c33fa50 Return addr 0x00007fe2681a03d0 (emqx_cm:takeover_session/2 + 312)\n

y(0) infinity\n

y(1) {takeover,begin}\n

y(2) <0.19863.2>\n

y(3) Catch 0x00007fe26a551908 (gen_server:call/3 + 128)\n\n

0x00007fde7c33fa78 Return addr 0x00007fe2681a28192 (emqx_cm:'-open_session/3-fun-1-'/5 + 96)\n

y(0) emqx_connection\n

y(1) <0.19863.2>\n\n

0x00007fde7c33fa90 Return addr 0x00007fe26819a978 (emqx_cm_locker:trans/3 + 360)\n

y(0) []\n

y(1) []\n

y(2) <0.19863.2>\n

y(3) <<\"192.168.30.16_1701152524553\">>\n

y(4) #{clean_start=>false,clientid=><<\"192.168.30.16_1701152524553\">>,conn_mod=>emqx_connection,conn_props=>#{},connected_at=>1701153199092,disconnected_at=>1701153199091,expiry_interval=>71680,keepalive=>60,peercert=>nossl,peername=>{{192,168,30,43},57440},proto_name=><<\"MQTT\">>,proto_ver=>4,receive_maximum=>32,sockname=>{{192,168,30,16},1883},socktype=>tcp,username=><<\"admin\">>}\n

y(5) #{anonymous=>true,auth_result=>success,clientid=><<\"192.168.30.16_1701152524553\">>,is_bridge=>false,is_superuser=>false,mountpoint=>undefined,peerhost=>{192,168,30,43},protocol=>mqtt,sockport=>1883,username=><<\"admin\">>,zone=>external}\n\n

0x00007fde7c33fac8 Return addr 0x00007fe26818192b8 (emqx_channel:process_connect/2 + 168)\n

y(0) []\n

y(1) []\n

y(2) <<\"192.168.30.16_1701152524553\">>\n

y(3) Catch 0x00007fe26819a9b8 (emqx_cm_locker:trans/3 + 424)\n\n

0x00007fde7c33faf0 Return addr 0x00007fe268197608 (emqx_connection:with_channel/3 + 232)\n

y(0) []\n

y(1) []\n

y(2) {channel,#{clean_start=>false,clientid=><<\"192.168.30.16_1701152524553\">>,conn_mod=>emqx_connection,conn_props=>#{},connected_at=>1701153199092,disconnected_at=>1701153199091,expiry_interval=>71680,keepalive=>60,peercert=>nossl,peername=>{{192,168,30,43},57440},proto_name=><<\"MQTT\">>,proto_ver=>4,receive_maximum=>32,sockname=>{{192,168,30,16},1883},socktype=>tcp,username=><<\"admin\">>},#{anonymous=>true,auth_result=>success,clientid=><<\"192.168.30.16_1701152524553\">>,is_bridge=>false,is_superuser=>false,mountpoint=>undefined,peerhost=>{192,168,30,43},protocol=>mqtt,sockport=>1883,username=><<\"admin\">>,zone=>external},{session,#{<<\"product/direct/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/order_return/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}}},0,false,{inflight,32,{0,nil}},{mqueue,true,1916800,0,0,none,infinity,{queue,[],[],0}},1,30000,#{},19168,300000,1701152524859},{keepalive,45000,0,0},undefined,#{inbound=>#{},outbound=>#{}},undefined,#{},undefined,#{alive_timer=>#Ref<0.2499957634.4089446403.15885>,expire_timer=>#Ref<0.2499957634.4089446403.17052>},connected,false,false,[]}\ny(3) #{}\n\n0x00007fde7c33fb18 Return addr 0x00007fe2681948b0 (emqx_connection:process_msg/3 + 136)\n

y(0) []\n

y(1) {state,esockd_transport,#Port<0.17354>,{{192,168,30,43},57440},{{192,168,30,16},1883},closed,19168,undefined,undefined,{none,#{max_size=>19248576,strict_mode=>false,version=>4}},#Fun<emqx_frame.4.19219284926>,{channel,#{clean_start=>false,clientid=><<\"192.168.30.16_1701152524553\">>,conn_mod=>emqx_connection,conn_props=>#{},connected_at=>1701153199074,disconnected_at=>1701153199091,expiry_interval=>71680,keepalive=>60,peercert=>nossl,peername=>{{192,168,30,43},57440},proto_name=><<\"MQTT\">>,proto_ver=>4,receive_maximum=>32,sockname=>{{192,168,30,16},1883},socktype=>tcp,username=><<\"admin\">>},#{anonymous=>true,auth_result=>success,clientid=><<\"192.168.30.16_1701152524553\">>,is_bridge=>false,is_superuser=>false,mountpoint=>undefined,peerhost=>{192,168,30,43},protocol=>mqtt,sockport=>1883,username=><<\"admin\">>,zone=>external},{session,#{<<\"product/direct/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/order_return/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}}},0,false,{inflight,32,{0,nil}},{mqueue,true,1916800,0,0,none,infinity,{queue,[],[],0}},1,30000,#{},19168,300000,1701152524859},{keepalive,45000,0,0},undefined,#{inbound=>#{},outbound=>#{}},undefined,#{},undefined,#{alive_timer=>#Ref<0.2499957634.4089446403.15885>,expire_timer=>#Ref<0.2499957634.4089446403.17052>},disconnected,false,false,[]},{gc_state,#{cnt=>{16000,16000},oct=>{16777216,16777216}}},#Ref<0.2499957634.4089708546.12231>,15000,undefined
}\n

y(2) []\n\n0x00007fde7c33fb38 Return addr 0x00007fe26a4ddee8 (proc_lib:wake_up/3 + 1168)\n

y(0) []\n

y(1) {state,esockd_transport,#Port<0.17354>,{{192,168,30,43},57440},{{192,168,30,16},1883},closed,19168,undefined,undefined,{none,#{max_size=>19248576,strict_mode=>false,version=>4}},#Fun<emqx_frame.4.19219284926>,{channel,#{clean_start=>false,clientid=><<\"192.168.30.16_1701152524553\">>,conn_mod=>emqx_connection,conn_props=>#{},connected_at=>1701153199074,disconnected_at=>1701153199091,expiry_interval=>71680,keepalive=>60,peercert=>nossl,peername=>{{192,168,30,43},57440},proto_name=><<\"MQTT\">>,proto_ver=>4,receive_maximum=>32,sockname=>{{192,168,30,16},1883},socktype=>tcp,username=><<\"admin\">>},#{anonymous=>true,auth_result=>success,clientid=><<\"192.168.30.16_1701152524553\">>,is_bridge=>false,is_superuser=>false,mountpoint=>undefined,peerhost=>{192,168,30,43},protocol=>mqtt,sockport=>1883,username=><<\"admin\">>,zone=>external},{session,#{<<\"product/direct/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/direct/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gateway/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw/info/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/data/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/gw_chlidev/status/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}},<<\"product/order_return/#\">>=>#{nl=>0,qos=>2,rap=>0,rh=>0,sub_props=>#{}}},0,false,{inflight,32,{0,nil}},{mqueue,true,1916800,0,0,none,infinity,{queue,[],[],0}},1,30000,#{},19168,300000,1701152524859},{keepalive,45000,0,0},undefined,#{inbound=>#{},outbound=>#{}},undefined,#{},undefined,#{alive_timer=>#Ref<0.2499957634.4089446403.15885>,expire_timer=>#Ref<0.2499957634.4089446403.17052>},disconnected,false,false,[]},{gc_state,#{cnt=>{16000,16000},oct=>{16777216,16777216}}},#Ref<0.2499957634.4089708546.12231>,15000,undefined
}\n

y(2) <0.1652.0>\n

y(3) Catch 0x00007fe2681948b0 (emqx_connection:process_msg/3 + 136)\n\n0x00007fde7c33fb60 Return addr 0x000055a7bdfc8248 (<terminate process normally>)\n

y(0) []\n

y(1) []\n

y(2) Catch 0x00007fe26a4ddf08 (proc_lib:wake_up/3 + 152)\n">>}

Is there anything wrong?

<8867.29600.2>,<8867.19863.2>

These two processes appear to be stuck with a very large backlog of messages.

They are blocked waiting for the session takeover to complete, so they never get a chance to check the OOM watermark you configured, and the system runs out of memory. How many devices behave like this? You can try killing these two processes manually and see whether they get stuck again after the clients reconnect.

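%% Run this in the emqx remote_console. etop shows the pid as <8867.29600.2>; on the node itself it is <0.29600.2>.
erlang:exit(pid(0,29600,2), kill).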

No more than 100 devices, each sending one message every 5 seconds. Memory dropped back to normal after I killed the two processes.

So what can I do to give those two processes a chance to check the OOM watermark, and to make sure the broker does not go down while someone is using it?

Thank you very much!

There is no better way to do this on your version. You can only check for such processes and kill them manually.

Upgrade to the latest version 4.4.23 or 5.5.0 ASAP.

If you have a limited number of devices, it should be straightforward to upgrade. The new version has resolved similar stuck bugs.

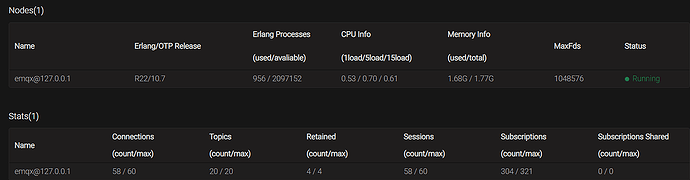

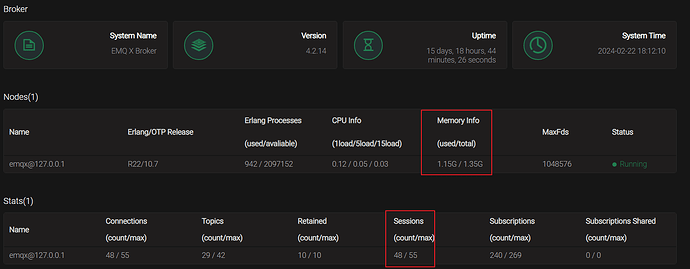

Where is the memory information in the dashboard of the new version, 5.4.1? I can’t find it.

Thank you very much for the earlier replies.

This is the memory of the OS, not the memory of the Erlang node.