[error] crasher: initial call: mria_rlog_server:init/1, pid: <0.2953.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.2952.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.2952.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6681; neighbours:

2023-10-23T05:59:19.852038+00:00 [error] Supervisor: {<0.2952.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.2953.0>.

2023-10-23T05:59:19.852205+00:00 [error] Supervisor: {<0.2952.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.2953.0>.

2023-10-23T05:59:19.852329+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.2952.0>.

2023-10-23T05:59:19.852485+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.2955.0>,‘$mria_meta_shard’}.

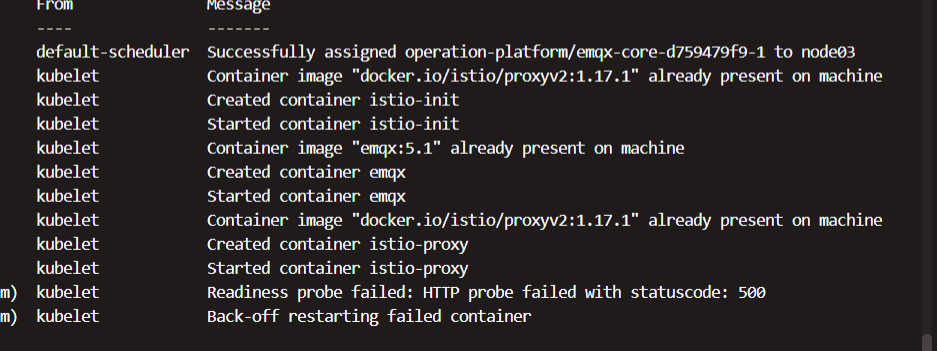

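Judging from the error, the node emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local did not start successfully. Do you have concrete steps to reproduce this, and which EMQX version are you running?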

This only shows that EMQX did not start successfully.

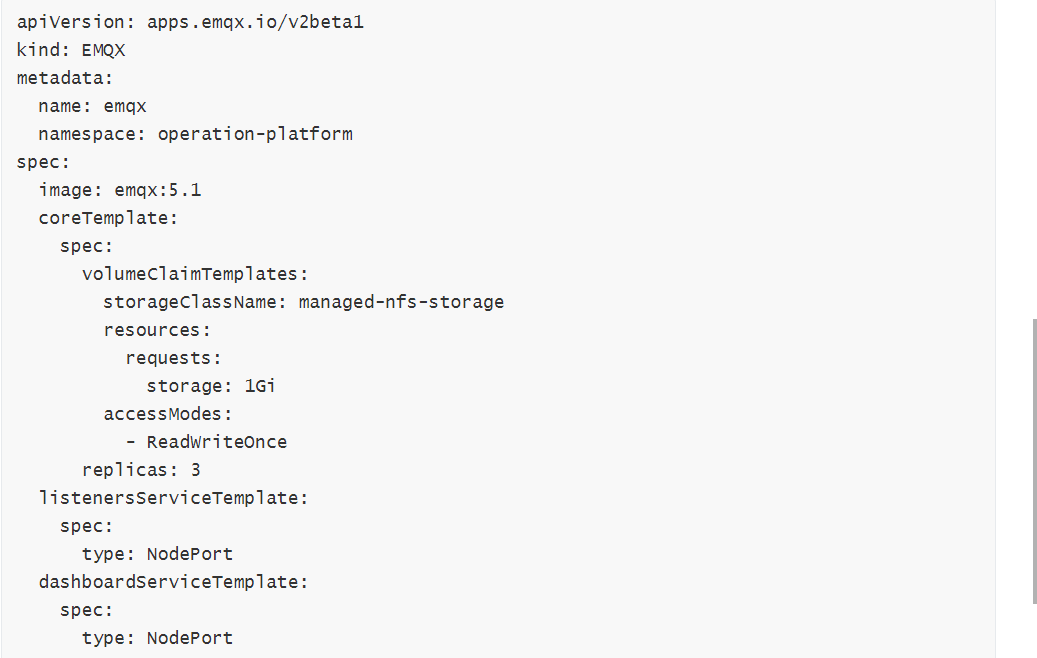

Do you have the complete YAML deployment file? Or did you deploy through the operator (https://github.com/emqx/emqx-operator/blob/main/docs/en_US/getting-started/getting-started.md)?

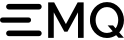

Hi @hui, could you share the Pod details and logs? You can get them with the following commands:

kubectl get pods $podName -o json

kubectl logs -p $podName

2023-10-23T06:45:19.446348+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3171.0>.

2023-10-23T06:45:19.446447+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3174.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.446592+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3175.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3174.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3174.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6680; neighbours:

2023-10-23T06:45:19.446833+00:00 [error] Supervisor: {<0.3174.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3175.0>.

2023-10-23T06:45:19.446955+00:00 [error] Supervisor: {<0.3174.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3175.0>.

2023-10-23T06:45:19.447029+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3174.0>.

2023-10-23T06:45:19.447283+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3177.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.447502+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3178.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3177.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3177.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6680; neighbours:

2023-10-23T06:45:19.447945+00:00 [error] Supervisor: {<0.3177.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3178.0>.

2023-10-23T06:45:19.448069+00:00 [error] Supervisor: {<0.3177.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3178.0>.

2023-10-23T06:45:19.448155+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3177.0>.

2023-10-23T06:45:19.448231+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3180.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.448352+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3181.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3180.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3180.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6680; neighbours:

2023-10-23T06:45:19.448664+00:00 [error] Supervisor: {<0.3180.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3181.0>.

2023-10-23T06:45:19.448889+00:00 [error] Supervisor: {<0.3180.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3181.0>.

2023-10-23T06:45:19.449004+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3180.0>.

2023-10-23T06:45:19.449128+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3183.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.449300+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3184.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3183.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3183.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6685; neighbours:

2023-10-23T06:45:19.449594+00:00 [error] Supervisor: {<0.3183.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3184.0>.

2023-10-23T06:45:19.449727+00:00 [error] Supervisor: {<0.3183.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3184.0>.

2023-10-23T06:45:19.449809+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3183.0>.

2023-10-23T06:45:19.449940+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3186.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.450106+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3187.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3186.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3186.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6680; neighbours:

2023-10-23T06:45:19.450376+00:00 [error] Supervisor: {<0.3186.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3187.0>.

2023-10-23T06:45:19.450498+00:00 [error] Supervisor: {<0.3186.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3187.0>.

2023-10-23T06:45:19.450582+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3186.0>.

2023-10-23T06:45:19.450712+00:00 [error] Generic server ‘$mria_meta_shard’ terminating. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Last message: {continue,post_init}. State: {<0.3189.0>,‘$mria_meta_shard’}.

2023-10-23T06:45:19.450887+00:00 [error] crasher: initial call: mria_rlog_server:init/1, pid: <0.3190.0>, registered_name: ‘$mria_meta_shard’, error: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}, ancestors: [<0.3189.0>,mria_shards_sup,mria_rlog_sup,mria_sup,<0.2155.0>], message_queue_len: 0, messages: , links: [<0.3189.0>], dictionary: [{‘$logger_metadata$’,#{domain => [mria,rlog,server],shard => ‘$mria_meta_shard’}}], trap_exit: true, status: running, heap_size: 4185, stack_size: 28, reductions: 6680; neighbours:

2023-10-23T06:45:19.451195+00:00 [error] Supervisor: {<0.3189.0>,mria_core_shard_sup}. Context: child_terminated. Reason: {{badmatch,{error,{node_not_running,‘emqx@emqx-core-d759479f9-0.emqx-headless.operation-platform.svc.cluster.local’}}},[{mria_rlog_server,process_schema,1,[{file,“mria_rlog_server.erl”},{line,233}]},{mria_rlog_server,handle_continue,2,[{file,“mria_rlog_server.erl”},{line,127}]},{gen_server,try_dispatch,4,[{file,“gen_server.erl”},{line,1123}]},{gen_server,loop,7,[{file,“gen_server.erl”},{line,865}]},{proc_lib,init_p_do_apply,3,[{file,“proc_lib.erl”},{line,240}]}]}. Offender: id=mria_rlog_server,pid=<0.3190.0>.

2023-10-23T06:45:19.451324+00:00 [error] Supervisor: {<0.3189.0>,mria_core_shard_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_server,pid=<0.3190.0>.

2023-10-23T06:45:19.451404+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: child_terminated. Reason: shutdown. Offender: id=‘$mria_meta_shard’,pid=<0.3189.0>.

2023-10-23T06:45:19.451530+00:00 [error] Supervisor: {local,mria_shards_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=‘$mria_meta_shard’,pid=<0.3189.0>.

2023-10-23T06:45:19.451633+00:00 [error] Supervisor: {local,mria_rlog_sup}. Context: child_terminated. Reason: shutdown. Offender: id=mria_shards_sup,pid=<0.2888.0>.

2023-10-23T06:45:19.451715+00:00 [error] Supervisor: {local,mria_rlog_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_shards_sup,pid=<0.2888.0>.

2023-10-23T06:45:19.451798+00:00 [error] Supervisor: {local,mria_sup}. Context: child_terminated. Reason: shutdown. Offender: id=mria_rlog_sup,pid=<0.2267.0>.

2023-10-23T06:45:19.451884+00:00 [error] Supervisor: {local,mria_sup}. Context: shutdown. Reason: reached_max_restart_intensity. Offender: id=mria_rlog_sup,pid=<0.2267.0>.

Received terminate signal, shutting down now

Which Pod printed the logs above? Also, please post the JSON output of the get-pod command.

The logs above were printed by emqx-0.

[root@master01 emqx]# kubectl get pods emqx-core-d759479f9-0 -n operation-platform -o json

{

“apiVersion”: “v1”,

“kind”: “Pod”,

“metadata”: {

“annotations”: {

“cni.projectcalico.org/podIP”: “",

“cni.projectcalico.org/podIPs”: "",

“kubectl.kubernetes.io/default-container”: “emqx”,

“kubectl.kubernetes.io/default-logs-container”: “emqx”,

“prometheus.io/path”: “/stats/prometheus”,

“prometheus.io/port”: “15020”,

“prometheus.io/scrape”: “true”,

“sidecar.istio.io/status”: “{"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["workload-socket","credential-socket","workload-certs","istio-envoy","istio-data","istio-podinfo","istio-token","istiod-ca-cert"],"imagePullSecrets":null,"revision":"default"}”

},

“creationTimestamp”: “2023-10-23T06:30:06Z”,

“generateName”: “emqx-core-d759479f9-”,

“labels”: {

“apps.emqx.io/db-role”: “core”,

“apps.emqx.io/instance”: “emqx”,

“apps.emqx.io/managed-by”: “emqx-operator”,

“apps.emqx.io/pod-template-hash”: “d759479f9”,

“controller-revision-hash”: “emqx-core-d759479f9-86d8c8c556”,

“security.istio.io/tlsMode”: “istio”,

“service.istio.io/canonical-name”: “emqx-core-d759479f9”,

“service.istio.io/canonical-revision”: “latest”,

“statefulset.kubernetes.io/pod-name”: “emqx-core-d759479f9-0”

},

“name”: “emqx-core-d759479f9-0”,

“namespace”: “operation-platform”,

“ownerReferences”: [

{

“apiVersion”: “apps/v1”,

“blockOwnerDeletion”: true,

“controller”: true,

“kind”: “StatefulSet”,

“name”: “emqx-core-d759479f9”,

“uid”: “f7c1f230-cddf-4040-a22-63e05a7937e”

}

],

“resourceVersion”: “75693572”,

“selfLink”: “/api/v1/namespaces/operation-platform/pods/emqx-core-d759479f9-0”,

“uid”: “f7a2d1d-3cbc-4569-81c7-ab10c045f”

},

“spec”: {

“containers”: [

{

“env”: [

{

“name”: “EMQX_DASHBOARD__LISTENERS__HTTP__BIND”,

“value”: “18083”

},

{

“name”: “POD_NAME”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.name”

}

}

},

{

“name”: “EMQX_CLUSTER__DISCOVERY_STRATEGY”,

“value”: “dns”

},

{

“name”: “EMQX_CLUSTER__DNS__RECORD_TYPE”,

“value”: “srv”

},

{

“name”: “EMQX_CLUSTER__DNS__NAME”,

“value”: “emqx-headless.operation-platform.svc.cluster.local”

},

{

“name”: “EMQX_HOST”,

“value”: “$(POD_NAME).$(EMQX_CLUSTER__DNS__NAME)”

},

{

“name”: “EMQX_NODE__DATA_DIR”,

“value”: “data”

},

{

“name”: “EMQX_NODE__ROLE”,

“value”: “core”

},

{

“name”: “EMQX_NODE__COOKIE”,

“valueFrom”: {

“secretKeyRef”: {

“key”: “node_cookie”,

“name”: “emqx-node-cookie”

}

}

},

{

“name”: “EMQX_API_KEY__BOOTSTRAP_FILE”,

“value”: “"/opt/emqx/data/bootstrap_api_key"”

}

],

“image”: “emqx:5.1”,

“imagePullPolicy”: “IfNotPresent”,

“livenessProbe”: {

“failureThreshold”: 3,

“httpGet”: {

“path”: “/app-health/emqx/livez”,

“port”: 15020,

“scheme”: “HTTP”

},

“initialDelaySeconds”: 60,

“periodSeconds”: 30,

“successThreshold”: 1,

“timeoutSeconds”: 1

},

“name”: “emqx”,

“ports”: [

{

“containerPort”: 18083,

“name”: “dashboard”,

“protocol”: “TCP”

}

],

“readinessProbe”: {

“failureThreshold”: 12,

“httpGet”: {

“path”: “/app-health/emqx/readyz”,

“port”: 15020,

“scheme”: “HTTP”

},

“initialDelaySeconds”: 10,

“periodSeconds”: 5,

“successThreshold”: 1,

“timeoutSeconds”: 1

},

“resources”: {},

“securityContext”: {

“runAsGroup”: 1000,

“runAsNonRoot”: true,

“runAsUser”: 1000

},

“terminationMessagePath”: “/dev/termination-log”,

“terminationMessagePolicy”: “File”,

“volumeMounts”: [

{

“mountPath”: “/opt/emqx/data/bootstrap_api_key”,

“name”: “bootstrap-api-key”,

“readOnly”: true,

“subPath”: “bootstrap_api_key”

},

{

“mountPath”: “/opt/emqx/etc/emqx.conf”,

“name”: “bootstrap-config”,

“readOnly”: true,

“subPath”: “emqx.conf”

},

{

“mountPath”: “/opt/emqx/log”,

“name”: “emqx-core-log”

},

{

“mountPath”: “/opt/emqx/data”,

“name”: “emqx-core-data”

},

{

“mountPath”: “/var/run/secrets/kubernetes.io/serviceaccount”,

“name”: “kube-api-access-s78vn”,

“readOnly”: true

}

]

},

{

“args”: [

“proxy”,

“sidecar”,

“–domain”,

“$(POD_NAMESPACE).svc.cluster.local”,

“–proxyLogLevel=warning”,

“–proxyComponentLogLevel=misc:error”,

“–log_output_level=default:info”,

“–concurrency”,

“2”

],

“env”: [

{

“name”: “JWT_POLICY”,

“value”: “third-party-jwt”

},

{

“name”: “PILOT_CERT_PROVIDER”,

“value”: “istiod”

},

{

“name”: “CA_ADDR”,

“value”: “istiod.istio-system.svc:15012”

},

{

“name”: “POD_NAME”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.name”

}

}

},

{

“name”: “POD_NAMESPACE”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.namespace”

}

}

},

{

“name”: “INSTANCE_IP”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “status.podIP”

}

}

},

{

“name”: “SERVICE_ACCOUNT”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “spec.serviceAccountName”

}

}

},

{

“name”: “HOST_IP”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “status.hostIP”

}

}

},

{

“name”: “PROXY_CONFIG”,

“value”: “{}\n”

},

{

“name”: “ISTIO_META_POD_PORTS”,

“value”: “[\n {"name":"dashboard","containerPort":18083,"protocol":"TCP"}\n]”

},

{

“name”: “ISTIO_META_APP_CONTAINERS”,

“value”: “emqx”

},

{

“name”: “ISTIO_META_CLUSTER_ID”,

“value”: “Kubernetes”

},

{

“name”: “ISTIO_META_NODE_NAME”,

“valueFrom”: {

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “spec.nodeName”

}

}

},

{

“name”: “ISTIO_META_INTERCEPTION_MODE”,

“value”: “REDIRECT”

},

{

“name”: “ISTIO_META_WORKLOAD_NAME”,

“value”: “emqx-core-d759479f9”

},

{

“name”: “ISTIO_META_OWNER”,

“value”: “kubernetes://apis/apps/v1/namespaces/operation-platform/statefulsets/emqx-core-d759479f9”

},

{

“name”: “ISTIO_META_MESH_ID”,

“value”: “cluster.local”

},

{

“name”: “TRUST_DOMAIN”,

“value”: “cluster.local”

},

{

“name”: “ISTIO_KUBE_APP_PROBERS”,

“value”: “{"/app-health/emqx/livez":{"httpGet":{"path":"/status","port":18083,"scheme":"HTTP"},"timeoutSeconds":1},"/app-health/emqx/readyz":{"httpGet":{"path":"/status","port":18083,"scheme":"HTTP"},"timeoutSeconds":1}}”

}

],

“image”: “docker.io/istio/proxyv2:1.17.1”,

“imagePullPolicy”: “IfNotPresent”,

“name”: “istio-proxy”,

“ports”: [

{

“containerPort”: 15090,

“name”: “http-envoy-prom”,

“protocol”: “TCP”

}

],

“readinessProbe”: {

“failureThreshold”: 30,

“httpGet”: {

“path”: “/healthz/ready”,

“port”: 15021,

“scheme”: “HTTP”

},

“initialDelaySeconds”: 1,

“periodSeconds”: 2,

“successThreshold”: 1,

“timeoutSeconds”: 3

},

“resources”: {

“limits”: {

“cpu”: “2”,

“memory”: “1Gi”

},

“requests”: {

“cpu”: “100m”,

“memory”: “128Mi”

}

},

“securityContext”: {

“allowPrivilegeEscalation”: false,

“capabilities”: {

“drop”: [

“ALL”

]

},

“privileged”: false,

“readOnlyRootFilesystem”: true,

“runAsGroup”: 1337,

“runAsNonRoot”: true,

“runAsUser”: 1337

},

“terminationMessagePath”: “/dev/termination-log”,

“terminationMessagePolicy”: “File”,

“volumeMounts”: [

{

“mountPath”: “/var/run/secrets/workload-spiffe-uds”,

“name”: “workload-socket”

},

{

“mountPath”: “/var/run/secrets/credential-uds”,

“name”: “credential-socket”

},

{

“mountPath”: “/var/run/secrets/workload-spiffe-credentials”,

“name”: “workload-certs”

},

{

“mountPath”: “/var/run/secrets/istio”,

“name”: “istiod-ca-cert”

},

{

“mountPath”: “/var/lib/istio/data”,

“name”: “istio-data”

},

{

“mountPath”: “/etc/istio/proxy”,

“name”: “istio-envoy”

},

{

“mountPath”: “/var/run/secrets/tokens”,

“name”: “istio-token”

},

{

“mountPath”: “/etc/istio/pod”,

“name”: “istio-podinfo”

},

{

“mountPath”: “/var/run/secrets/kubernetes.io/serviceaccount”,

“name”: “kube-api-access-s78vn”,

“readOnly”: true

}

]

}

],

“dnsPolicy”: “ClusterFirst”,

“enableServiceLinks”: true,

“hostname”: “emqx-core-d759479f9-0”,

“initContainers”: [

{

“args”: [

“istio-iptables”,

“-p”,

“15001”,

“-z”,

“15006”,

“-u”,

“1337”,

“-m”,

“REDIRECT”,

“-i”,

"”,

“-x”,

“”,

“-b”,

“*”,

“-d”,

“15090,15021,15020”,

“–log_output_level=default:info”

],

“image”: “docker.io/istio/proxyv2:1.17.1”,

“imagePullPolicy”: “IfNotPresent”,

“name”: “istio-init”,

“resources”: {

“limits”: {

“cpu”: “2”,

“memory”: “1Gi”

},

“requests”: {

“cpu”: “100m”,

“memory”: “128Mi”

}

},

“securityContext”: {

“allowPrivilegeEscalation”: false,

“capabilities”: {

“add”: [

“NET_ADMIN”,

“NET_RAW”

],

“drop”: [

“ALL”

]

},

“privileged”: false,

“readOnlyRootFilesystem”: false,

“runAsGroup”: 0,

“runAsNonRoot”: false,

“runAsUser”: 0

},

“terminationMessagePath”: “/dev/termination-log”,

“terminationMessagePolicy”: “File”,

“volumeMounts”: [

{

“mountPath”: “/var/run/secrets/kubernetes.io/serviceaccount”,

“name”: “kube-api-access-s78vn”,

“readOnly”: true

}

]

}

],

“nodeName”: “node01”,

“preemptionPolicy”: “PreemptLowerPriority”,

“priority”: 0,

“readinessGates”: [

{

“conditionType”: “apps.emqx.io/on-serving”

}

],

“restartPolicy”: “Always”,

“schedulerName”: “default-scheduler”,

“securityContext”: {

“fsGroup”: 1000,

“fsGroupChangePolicy”: “Always”,

“runAsGroup”: 1000,

“runAsUser”: 1000,

“supplementalGroups”: [

1000

]

},

“serviceAccount”: “default”,

“serviceAccountName”: “default”,

“subdomain”: “emqx-headless”,

“terminationGracePeriodSeconds”: 30,

“tolerations”: [

{

“effect”: “NoExecute”,

“key”: “node.kubernetes.io/not-ready”,

“operator”: “Exists”,

“tolerationSeconds”: 300

},

{

“effect”: “NoExecute”,

“key”: “node.kubernetes.io/unreachable”,

“operator”: “Exists”,

“tolerationSeconds”: 300

}

],

“volumes”: [

{

“emptyDir”: {},

“name”: “workload-socket”

},

{

“emptyDir”: {},

“name”: “credential-socket”

},

{

“emptyDir”: {},

“name”: “workload-certs”

},

{

“emptyDir”: {

“medium”: “Memory”

},

“name”: “istio-envoy”

},

{

“emptyDir”: {},

“name”: “istio-data”

},

{

“downwardAPI”: {

“defaultMode”: 420,

“items”: [

{

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.labels”

},

“path”: “labels”

},

{

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.annotations”

},

“path”: “annotations”

}

]

},

“name”: “istio-podinfo”

},

{

“name”: “istio-token”,

“projected”: {

“defaultMode”: 420,

“sources”: [

{

“serviceAccountToken”: {

“audience”: “istio-ca”,

“expirationSeconds”: 43200,

“path”: “istio-token”

}

}

]

}

},

{

“configMap”: {

“defaultMode”: 420,

“name”: “istio-ca-root-cert”

},

“name”: “istiod-ca-cert”

},

{

“name”: “emqx-core-data”,

“persistentVolumeClaim”: {

“claimName”: “emqx-core-data-emqx-core-d759479f9-0”

}

},

{

“name”: “bootstrap-api-key”,

“secret”: {

“defaultMode”: 420,

“secretName”: “emqx-bootstrap-api-key”

}

},

{

“configMap”: {

“defaultMode”: 420,

“name”: “emqx-configs”

},

“name”: “bootstrap-config”

},

{

“emptyDir”: {},

“name”: “emqx-core-log”

},

{

“name”: “kube-api-access-s78vn”,

“projected”: {

“defaultMode”: 420,

“sources”: [

{

“serviceAccountToken”: {

“expirationSeconds”: 3607,

“path”: “token”

}

},

{

“configMap”: {

“items”: [

{

“key”: “ca.crt”,

“path”: “ca.crt”

}

],

“name”: “kube-root-ca.crt”

}

},

{

“downwardAPI”: {

“items”: [

{

“fieldRef”: {

“apiVersion”: “v1”,

“fieldPath”: “metadata.namespace”

},

“path”: “namespace”

}

]

}

}

]

}

}

]

},

“status”: {

“conditions”: [

{

“lastProbeTime”: “2023-10-23T06:51:37Z”,

“lastTransitionTime”: “2023-10-23T06:51:37Z”,

“status”: “False”,

“type”: “apps.emqx.io/on-serving”

},

{

“lastProbeTime”: null,

“lastTransitionTime”: “2023-10-23T06:30:11Z”,

“status”: “True”,

“type”: “Initialized”

},

{

“lastProbeTime”: null,

“lastTransitionTime”: “2023-10-23T06:30:06Z”,

“message”: “containers with unready status: [emqx]”,

“reason”: “ContainersNotReady”,

“status”: “False”,

“type”: “Ready”

},

{

“lastProbeTime”: null,

“lastTransitionTime”: “2023-10-23T06:30:06Z”,

“message”: “containers with unready status: [emqx]”,

“reason”: “ContainersNotReady”,

“status”: “False”,

“type”: “ContainersReady”

},

{

“lastProbeTime”: null,

“lastTransitionTime”: “2023-10-23T06:30:06Z”,

“status”: “True”,

“type”: “PodScheduled”

}

],

“containerStatuses”: [

{

“containerID”: “docker://45eac684e29c00191f9955a6404af537dabd0b30e09adc361d29359d5c8f3c9a”,

“image”: “emqx:5.1”,

“imageID”: “docker-pullable://emqx@sha256:3f072d66765dbd8dfc5de99d82e3ff153ef62bd887a2d77ad95033c3b0098f3b”,

“lastState”: {

“terminated”: {

“containerID”: “docker://6c1de2d3c2f429da4419787f3c07c77865171ca05d2297704c6b199562d16365”,

“exitCode”: 0,

“finishedAt”: “2023-10-23T06:47:37Z”,

“reason”: “Completed”,

“startedAt”: “2023-10-23T06:45:07Z”

}

},

“name”: “emqx”,

“ready”: false,

“restartCount”: 7,

“started”: true,

“state”: {

“running”: {

“startedAt”: “2023-10-23T06:50:24Z”

}

}

},

{

“containerID”: “docker://b033d07f20d5b02a7433fcdad21d77593f8f0540c61b310e873af9c15571fbc9”,

“image”: “istio/proxyv2:1.17.1”,

“imageID”: “docker-pullable://istio/proxyv2@sha256:52aea5fbe2de20f08f3e0412ad7a4cd54a492240ff40974261ee4bdb43871d”,

“lastState”: {},

“name”: “istio-proxy”,

“ready”: true,

“restartCount”: 0,

“started”: true,

“state”: {

“running”: {

“startedAt”: “2023-10-23T06:30:11Z”

}

}

}

],

“hostIP”: “10.206.38.9”,

“initContainerStatuses”: [

{

“containerID”: “docker://afc05f99637c6e80caeba26987a42ae17e3ba0785dbc46844e7a25822072b”,

“image”: “istio/proxyv2:1.17.1”,

“imageID”: “docker-pullable://istio/proxyv2@sha256:21aea5fbe2de20f08f3e0412ad7a4cd54a492240ff40974261ee4bdb43871d”,

“lastState”: {},

“name”: “istio-init”,

“ready”: true,

“restartCount”: 0,

“state”: {

“terminated”: {

“containerID”: “docker://afc05f99637c6e80caeba26987a744d2ae17e3ba0785dbc46844e7a25822072b”,

“exitCode”: 0,

“finishedAt”: “2023-10-23T06:30:10Z”,

“reason”: “Completed”,

“startedAt”: “2023-10-23T06:30:10Z”

}

}

}

],

“phase”: “Running”,

“podIP”: “10.244.196.134”,

“podIPs”: [

{

“ip”: “10.244.196.88”

}

],

“qosClass”: “Burstable”,

“startTime”: “2023-10-23T06:30:06Z”

}

}

I noticed that you have Istio deployed. Could you add ports 4370 and 5369 to ISTIO_META_POD_PORTS and redeploy to see whether that helps?
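
For reference, here is a rough sketch of what the extended ISTIO_META_POD_PORTS value could look like, starting from the value shown in the Pod JSON above. The helper `add_pod_ports` and the port names `cluster-4370` and `cluster-5369` are hypothetical, chosen only for illustration. As far as I know, the Istio sidecar injector normally derives this variable from the ports declared on the application container, so in practice the change would probably go into the `ports` list of the `emqx` container rather than being edited by hand; treat that as an assumption about this deployment.

```python
import json


def add_pod_ports(pod_ports_json, extra_ports):
    """Return an ISTIO_META_POD_PORTS-style JSON string with extra TCP ports appended.

    pod_ports_json: the existing value, a JSON array of port objects.
    extra_ports: iterable of (name, port_number) pairs to add.
    Ports that are already declared are left untouched.
    """
    ports = json.loads(pod_ports_json)
    declared = {p["containerPort"] for p in ports}
    for name, number in extra_ports:
        if number not in declared:
            ports.append({"name": name, "containerPort": number, "protocol": "TCP"})
            declared.add(number)
    return json.dumps(ports)


# Existing value, taken from the istio-proxy container env in the Pod JSON.
current = '[{"name":"dashboard","containerPort":18083,"protocol":"TCP"}]'

# Port names below are hypothetical labels, not names used by EMQX or Istio.
updated = add_pod_ports(current, [("cluster-4370", 4370), ("cluster-5369", 5369)])
print(updated)
```

After redeploying, the same `kubectl get pods $podName -o json` command can be used to check that the two ports actually show up in the istio-proxy container's environment.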