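Version: EMQX 4.4.18

Deployment method: zip package

Cluster nodes: 4

Operating systems and server count: 1 CentOS 7 server, 3 CentOS 8 servers

Authentication: Redis

Tuning: Linux system tuning has been applied as described in the documentation

Problem: The cluster has four nodes. A standalone single node reaches 60,000 connections in a connection-only load test with no disconnects or reconnects. Once deployed as a cluster behind a load balancer, the same connection-only test starts showing disconnects and reconnects at 1,000+ connections. When the test targets 10,000 connections, clients disconnect and reconnect until they exceed the retry limit, after which they stop trying to connect.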

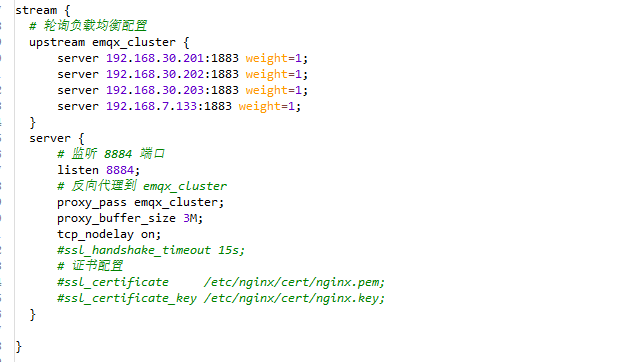

Load balancer configuration

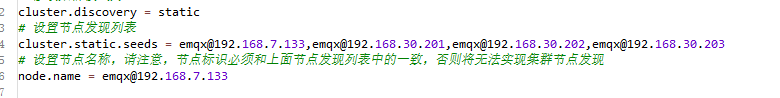

EMQX configuration file

info.log

2023-06-27T09:00:42.629598+08:00 [info] mqttx-a-81@192.168.7.133:44512 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10317.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.630212+08:00 [info] mqttx-a-92@192.168.7.133:44556 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10333.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631249+08:00 [info] mqttx-a-113@192.168.7.133:44640 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10362.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631198+08:00 [info] mqttx-a-134@192.168.7.133:44724 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10393.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631049+08:00 [info] mqttx-a-105@192.168.7.133:44608 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10352.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631175+08:00 [info] mqttx-a-180@192.168.7.133:44908 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10439.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631552+08:00 [info] mqttx-a-117@192.168.7.133:44656 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10365.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631467+08:00 [info] mqttx-a-163@192.168.7.133:44840 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10419.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631407+08:00 [info] mqttx-a-201@192.168.7.133:44992 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10462.3>, reason: {shutdown,tcp_closed}

2023-06-27T09:00:42.631811+08:00 [info] mqttx-a-317@192.168.7.133:45456 file: emqx_connection.erl, line: 544, mfa: {emqx_connection,terminate,2}, msg: terminate, pid: <0.10624.3>, reason: {shutdown,tcp_closed}
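
To check whether the rest of info.log follows the same pattern as the excerpt above (every entry shown reports `reason: {shutdown,tcp_closed}` from peer address 192.168.7.133), the entries can be tallied by termination reason and peer IP. The following is a minimal sketch, assuming each log entry sits on one line in exactly the format shown above; the `summarize` helper and the `LINE_RE` pattern are illustrative names, not part of EMQX.

```python
import re
from collections import Counter

# Assumed layout, taken from the excerpt above:
# <timestamp> [<level>] <clientid>@<ip>:<port> file: ..., reason: <reason>
LINE_RE = re.compile(
    r"^(?P<ts>\S+) \[(?P<level>\w+)\] "
    r"(?P<clientid>[^@\s]+)@(?P<ip>[\d.]+):(?P<port>\d+) "
    r".*?reason: (?P<reason>.+)$"
)

def summarize(lines):
    """Count termination reasons and peer IPs across log lines."""
    reasons = Counter()
    peers = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue  # skip blank lines and entries in other formats
        reasons[match.group("reason")] += 1
        peers[match.group("ip")] += 1
    return reasons, peers

if __name__ == "__main__":
    # Path is an assumption; point it at the node's actual log file.
    with open("info.log", encoding="utf-8") as handle:
        reasons, peers = summarize(handle)
    print("reasons:", reasons.most_common())
    print("peers:", peers.most_common())
```

Running this on each of the four nodes would show whether other termination reasons appear and whether all closed connections come from the same peer address.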