EMQX version: open-source edition, 5.8.8

Test procedure:

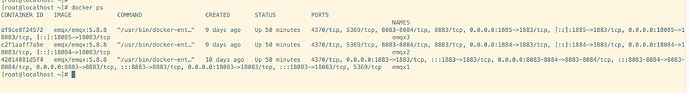

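1. An EMQX cluster of three nodes is deployed on a single server (emqx1 on port 1883, emqx2 on port 1884, emqx3 on port 1885). Each node is allocated 8 CPU cores and 32 GB of memory.

2. emqtt_bench publishes data to the cluster. The inbound message rate is 20,000 msg/s on emqx1, 20,000 msg/s on emqx2, and 20,000 msg/s on emqx3.

3. Subscribers are started to consume the messages: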

./emqtt_bench sub -h 192.168.100.162 -p 1883 -k 300 -q 1 -t 'v1/+/host/+/data' -c 15

./emqtt_bench sub -h 192.168.100.162 -p 1884 -k 300 -q 1 -t 'v1/+/host/+/data' -c 15

./emqtt_bench sub -h 192.168.100.162 -p 1885 -k 300 -q 1 -t 'v1/+/host/+/data' -c 15
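To put the load in perspective, the figures from steps 2 and 3 can be turned into a rough fan-out estimate. This is only a back-of-the-envelope sketch: it assumes every published topic matches the filter `v1/+/host/+/data` (the topic `v1/device/host/guid/data` seen in the logs does), and it assumes each node forwards a locally published message once to every other node that has matching subscribers. The function and key names are illustrative.

```python
# Figures taken from the post.
NODES = 3
PUBLISH_RATE_PER_NODE = 20_000  # inbound msg/s on each node
SUBSCRIBERS_PER_NODE = 15       # "-c 15" in each emqtt_bench sub command


def fan_out(nodes, publish_rate_per_node, subscribers_per_node):
    """Estimate delivery and inter-node forwarding rates (msg/s)."""
    total_in = nodes * publish_rate_per_node
    total_subscribers = nodes * subscribers_per_node
    # Assumption: every message matches the wildcard filter, so every
    # subscriber receives every message published anywhere in the cluster.
    deliveries_per_node = total_in * subscribers_per_node
    total_deliveries = total_in * total_subscribers
    # Assumption: one forward per remote node for each locally published message.
    forwards_sent_per_node = publish_rate_per_node * (nodes - 1)
    return {
        "total_in_per_s": total_in,
        "total_subscribers": total_subscribers,
        "deliveries_per_node_per_s": deliveries_per_node,
        "total_deliveries_per_s": total_deliveries,
        "forwards_sent_per_node_per_s": forwards_sent_per_node,
    }


result = fan_out(NODES, PUBLISH_RATE_PER_NODE, SUBSCRIBERS_PER_NODE)
for name, value in result.items():
    print(f"{name}: {value:,}")
```

Under these assumptions, 60,000 msg/s of inbound traffic becomes about 2.7 million deliveries per second across 45 subscribers, with each node sending roughly 40,000 forwarded messages per second to its peers.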

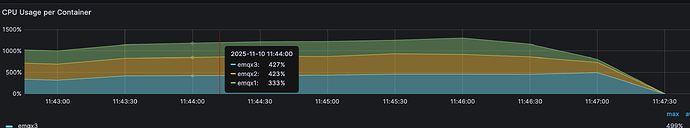

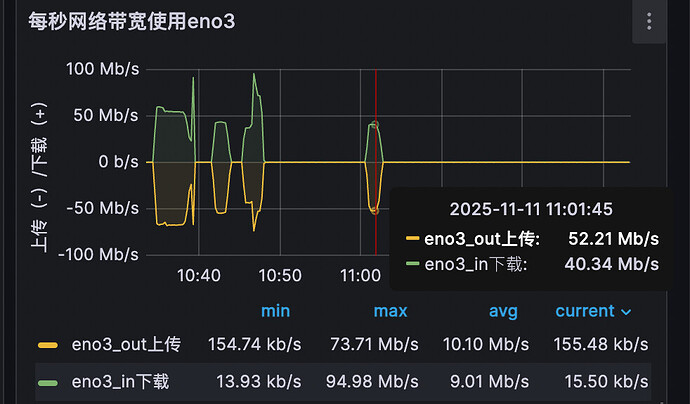

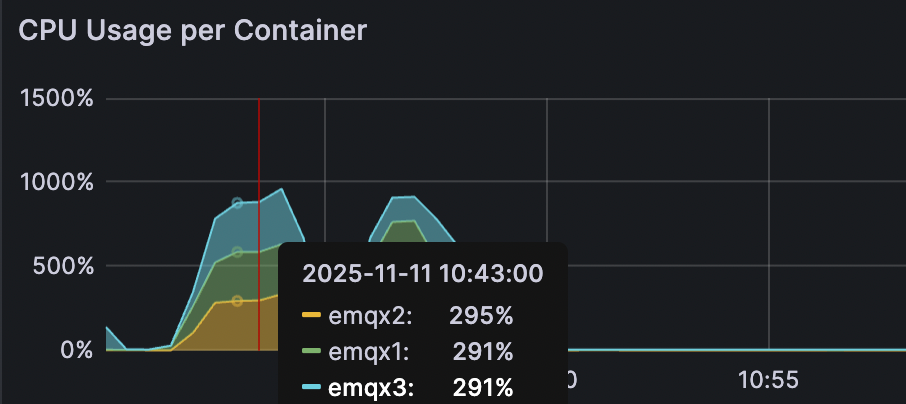

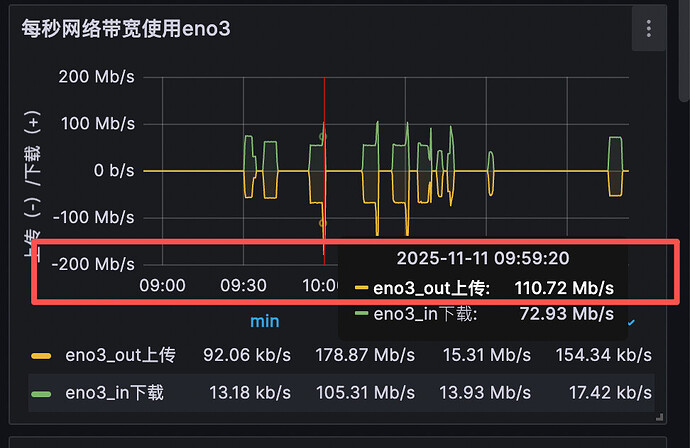

4. Resource usage on each node:

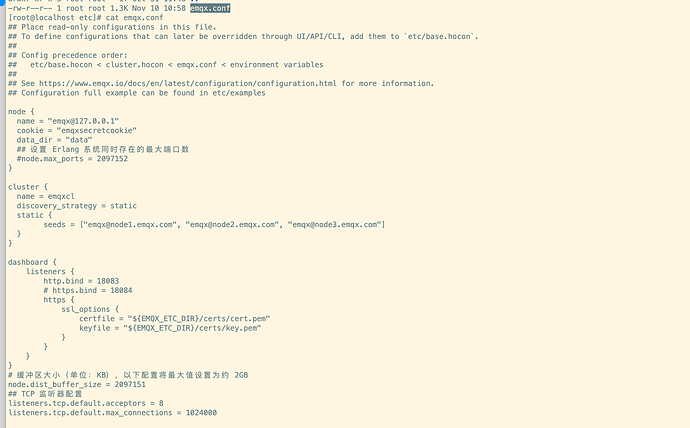

5. The configuration file is as follows:

6. The logs show the following errors:

2025-11-10T11:45:28.837426+08:00 [error] Supervisor: {local,gen_rpc_client_sup}. Context: child_terminated. Reason: {badtcp,timeout}. Offender: id=gen_rpc_client,pid=<0.15361402.0>.

2025-11-10T11:45:29.210808+08:00 [error] event=send_async_failed socket="#Port<0.4778>" reason="timeout"

2025-11-10T11:45:29.211016+08:00 [error] msg: gen_rpc_error, error: transmission_failed, driver: tcp, packet: cast, reason: {badtcp,timeout}, socket: #Port<0.4778>

2025-11-10T11:45:29.211286+08:00 [error] Generic server {client,{'emqx@node3.emqx.com',5}} terminating. Reason: {badtcp,timeout}. Last message: {{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12290921.0>,{message,<<0,6,67,53,92,115,204,72,49,140,0,187,139,105,23,37>>,1,<<"localhost_bench_pub_1945684490_541">>,#{dup => false,retain => false},#{peername => {{192,168,100,163},48678},protocol => mqtt,username => undefined,peerhost => {192,168,100,163},properties => #{},proto_ver => 5,client_attrs => #{}},<<"v1/device/host/guid/data">>,<<"{">>,1762746323684,#{}}}]},undefined}. State: {state,#Port<0.4778>,tcp,gen_rpc_driver_tcp,tcp_closed,tcp_error,256,{keepalive,#Fun<gen_rpc_client.3.129353779>,82,60,{keepalive,check},#Ref<0.1528632153.2407530498.89826>,0}}.

2025-11-10T11:45:29.319153+08:00 [error] crasher: initial call: gen_rpc_client:init/1, pid: <0.15361801.0>, registered_name: , exit: {{badtcp,timeout},[{gen_server,handle_common_reply,8,[{file,"gen_server.erl"},{line,1226}]},{proc_lib,init_p_do_apply,3,[{file,"proc_lib.erl"},{line,241}]}]}, ancestors: [gen_rpc_client_sup,gen_rpc_sup,<0.2218.0>], message_queue_len: 17206, messages: [{{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12289869.0>,{message,<<0,6,67,53,92,115,230,20,49,140,0,187,135,77,23,62>>,1,<<"localhost_bench_pub_1882175208_864">>,#{dup => false,retain => false},#{peername => {{192,168,100,163},48274},protocol => mqtt,username => undefined,peerhost => {192,168,100,163},properties => #{},proto_ver => 5,client_attrs => #{}},<<"v1/device/host/guid/data">>,<<"{">>,1762746323691,#{}}}]},undefined},{{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12334289.0>,{message,<<0,6,67,53,92,115,230,60,49,140,0,188,52,209,22,198>>,1,<<"localhost_bench_pub_415187749_27">>,#{dup => false,retain => false},#{peername => {{192,168,100,163},51320},protocol => mqtt,username => undefined,peerhost => {192,168,100,163},properties => #{},proto_ver => 5,client_attrs => #{}},<<"v1/device/host/guid/data">>,<<"{">>,1762746323691,#{}}}]},undefined},{{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12290893.0>,{message,<<0,6,67,53,92,115,230,82,49,140,0,187,139,77,23,36>>,1,<<"localhost_bench_pub_3019818464_254">>,#{dup => false,retain => false},#{peername => {{192,168,100,163},48636},protocol => mqtt,username => undefined,peerhost => {192,168,100,163},properties => #{},proto_ver => 5,client_attrs => #{}},<<"v1/device/host/guid/data">>,<<"{">>,1762746323691,#{}}}]},undefined},{{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12319665.0>,{message,<<0,6,67,53,92,115,230,89,49,140,0,187,251,177,22,220>>,1,<<"localhost_bench_pub_280497934_187">>,#{dup => false,retain => false},#{peername => {{192,168,100,163},50734},protocol => mqtt,username => undefined,peerhost => {192,168,100,163},properties => #{},proto_ver => 5,client_attrs => #{}},<<"v1/device/host/guid/data">>,<<"{">>,1762746323691,#{}}}]},undefined},{{oc,emqx_broker,dispatch,[<<"v1/+/host/+/data">>,{delivery,<0.12334307.0>,{message,<<0,6,67,53,92,115,230,140,49,140,0,188,52,227,22,191>>,1,<<"localhost_bench_p
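The key facts in these logs are the repeated `{badtcp,timeout}` reason and the `message_queue_len: 17206` backlog in the crashed `gen_rpc_client` process. As a rough aid for scanning a longer log file for the same signals, here is a minimal sketch; the sample lines are abridged copies of the entries in this post (elided parts are shown as `...`), and the function name is illustrative.

```python
import re
from collections import Counter

# Abridged copies of two log entries from this post ("..." marks elided text).
LOG_LINES = [
    '2025-11-10T11:45:28.837426+08:00 [error] Supervisor: {local,gen_rpc_client_sup}. '
    'Context: child_terminated. Reason: {badtcp,timeout}. '
    'Offender: id=gen_rpc_client,pid=<0.15361402.0>.',
    '2025-11-10T11:45:29.319153+08:00 [error] crasher: initial call: gen_rpc_client:init/1, '
    'pid: <0.15361801.0>, exit: {{badtcp,timeout},...}, '
    'message_queue_len: 17206, messages: [...]',
]

BADTCP_RE = re.compile(r"\{badtcp,(\w+)\}")
QUEUE_RE = re.compile(r"message_queue_len: (\d+)")


def summarize(lines):
    """Count badtcp reasons (one per line) and collect mailbox backlog sizes."""
    reasons = Counter()
    queue_lens = []
    for line in lines:
        reason = BADTCP_RE.search(line)
        if reason:
            reasons[reason.group(1)] += 1
        queue = QUEUE_RE.search(line)
        if queue:
            queue_lens.append(int(queue.group(1)))
    return reasons, queue_lens


reasons, queue_lens = summarize(LOG_LINES)
print("badtcp reasons:", dict(reasons))
print("largest message_queue_len:", max(queue_lens, default=0))
```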

How can this be resolved?