```hocon
node {
  name = "emqx@172.31.195.122"
  cookie = "emqxsecretcookie"
  data_dir = "data"
  role = replicant
  process_limit = 2097152
  max_ports = 1048576
}

##cluster {
## discovery_strategy = static
## static {
## seeds = ["emqx@172.31.195.122", "emqx@172.31.195.121"]
## }
##}

cluster {
  discovery_strategy = etcd
  etcd {
    server = "http://172.31.195.121:2379,http://172.31.195.122:2379"
    prefix = emqx-center
    node_ttl = 1m
  }
}

## EMQX provides support for two primary log handlers: `file` and `console`, with an additional `audit` handler specifically designed to always direct logs to files.
## The system's default log handling behavior can be configured via the environment variable `EMQX_DEFAULT_LOG_HANDLER`, which accepts the following settings:
##
## - `file`: Directs log output exclusively to files.
## - `console`: Channels log output solely to the console.
##
## It's noteworthy that `EMQX_DEFAULT_LOG_HANDLER` is set to `file` when EMQX is initiated via systemd `emqx.service` file.
## In scenarios outside systemd initiation, `console` serves as the default log handler.
## Read more about configs here: https://www.emqx.io/docs/en/latest/configuration/logs.html
log {
  file {
    level = debug
  }
  console {
    level = debug
  }
}

listeners.tcp.default {
  bind = "0.0.0.0:2883"
  max_connections = 1024000
}

listeners.wss.default {
  bind = "0.0.0.0:8884"
  max_connections = 1024000
  websocket.mqtt_path = "/mqtt"
  ssl_options {
    cacertfile = "etc/certs/cacert.pem"
    certfile = "etc/certs/cert.pem"
    keyfile = "etc/certs/key.pem"
  }
}

listeners.ws.default {
  bind = "0.0.0.0:8083"
  max_connections = 1024000
  websocket.mqtt_path = "/mqtt"
}

listeners.ssl.default {
  bind = "0.0.0.0:8883"
  max_connections = 1024000
  ssl_options {
    cacertfile = "etc/certs/cacert.pem"
    certfile = "etc/certs/cert.pem"
    keyfile = "etc/certs/key.pem"
    verify = verify_none
    fail_if_no_peer_cert = false
  }
}

dashboard {
  listeners.http {
    bind = 18083
  }
  default_username = "admin"
  default_password = "1qaz@wsx"
}
```

Log:

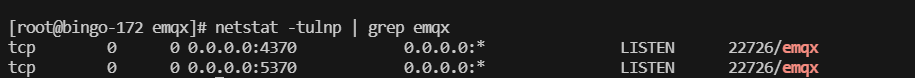

```
2024-12-18T14:51:50.850162+08:00 [info] event=server_setup_successfully driver=tcp port=5370 socket="#Port<0.4>"
2024-12-18T14:51:50.850416+08:00 [info] msg: gen_rpc_dispatcher_start
2024-12-18T14:51:50.851680+08:00 [notice] msg: Starting mria
2024-12-18T14:51:50.852059+08:00 [notice] msg: Starting mnesia
2024-12-18T14:51:50.852138+08:00 [debug] msg: Ensure mnesia schema
2024-12-18T14:51:50.853631+08:00 [notice] msg: Creating new mnesia schema, result: {error,{'emqx@172.31.195.122',{already_exists,'emqx@172.31.195.122'}}}
2024-12-18T14:51:51.111118+08:00 [notice] msg: Starting shards
2024-12-18T14:51:51.111397+08:00 [debug] msg: rlog_schema_init
2024-12-18T14:51:51.184329+08:00 [info] msg: Setting RLOG shard config, tables: ['$mria_rlog_sync',mria_schema], shard: '$mria_meta_shard'
2024-12-18T14:51:51.184600+08:00 [info] msg: Converging schema
2024-12-18T14:51:51.185120+08:00 [info] msg: Setting RLOG shard config, tables: ['$mria_rlog_sync',mria_schema], shard: '$mria_meta_shard'
2024-12-18T14:51:51.185511+08:00 [info] Mria(Membership): Node emqx@172.31.195.121 up
2024-12-18T14:51:51.185838+08:00 [info] msg: starting_rlog_shard, shard: '$mria_meta_shard'
2024-12-18T14:51:51.185973+08:00 [info] msg: state_change, from: disconnected, to: disconnected, shard: '$mria_meta_shard'
2024-12-18T14:51:51.186246+08:00 [debug] msg: rlog_replica_reconnect, node: 'emqx@172.31.195.122', shard: '$mria_meta_shard'
2024-12-18T14:51:51.186506+08:00 [info] msg: Starting ekka
2024-12-18T14:51:51.228643+08:00 [info] ekka_cluster_etcd connect to "172.31.195.121":2379 gun(<0.2539.0>) successed
2024-12-18T14:51:51.270473+08:00 [info] ekka_cluster_etcd connect to "172.31.195.122":2379 gun(<0.2540.0>) successed
2024-12-18T14:51:51.311252+08:00 [info] msg: Ekka is running
2024-12-18T14:51:51.311474+08:00 [notice] msg: (re)starting_emqx_apps
2024-12-18T14:51:51.313127+08:00 [debug] msg: starting_app, app: emqx_conf
2024-12-18T14:51:51.313399+08:00 [debug] msg: mria_mnesia_create_table, name: cluster_rpc_mfa, options: [{type,ordered_set},{rlog_shard,emqx_cluster_rpc_shard},{storage,disc_copies},{record_name,cluster_rpc_mfa},{attributes,[tnx_id,mfa,created_at,initiator]}]
2024-12-18T14:51:52.388384+08:00 [debug] msg: Trying to connect to the core node, node: 'emqx@172.31.195.121'
2024-12-18T14:51:52.388648+08:00 [info] msg: mria_lb_core_discovery_new_nodes, node: 'emqx@172.31.195.122', ignored_nodes: [], previous_cores: [], returned_cores: ['emqx@172.31.195.121']
2024-12-18T14:51:52.390304+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_mfa, '$span': start
2024-12-18T14:51:52.390589+08:00 [info] msg: Setting RLOG shard config, tables: [cluster_rpc_mfa], shard: emqx_cluster_rpc_shard
2024-12-18T14:51:52.390757+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_mfa, '$span': {complete,true}
2024-12-18T14:51:52.390851+08:00 [debug] msg: mria_mnesia_create_table, name: cluster_rpc_commit, options: [{type,set},{rlog_shard,emqx_cluster_rpc_shard},{storage,disc_copies},{record_name,cluster_rpc_commit},{attributes,[node,tnx_id]}]
2024-12-18T14:51:52.391798+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_commit, '$span': start
2024-12-18T14:51:52.392039+08:00 [info] msg: Setting RLOG shard config, tables: [cluster_rpc_commit,cluster_rpc_mfa], shard: emqx_cluster_rpc_shard
2024-12-18T14:51:52.393110+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_commit, '$span': {complete,true}
2024-12-18T14:51:52.393339+08:00 [info] msg: starting_rlog_shard, shard: emqx_cluster_rpc_shard
2024-12-18T14:51:52.393429+08:00 [info] msg: state_change, from: disconnected, to: disconnected, shard: emqx_cluster_rpc_shard
2024-12-18T14:51:52.393633+08:00 [debug] msg: rlog_replica_reconnect, node: 'emqx@172.31.195.122', shard: emqx_cluster_rpc_shard
2024-12-18T14:51:52.399578+08:00 [debug] msg: Connected to the core node, node: 'emqx@172.31.195.121', shard: '$mria_meta_shard', seqno: 447223
2024-12-18T14:51:52.399819+08:00 [info] msg: state_change, from: disconnected, to: bootstrap, shard: '$mria_meta_shard'
2024-12-18T14:51:52.400913+08:00 [debug] msg: ensure_local_table, table: '$mria_rlog_sync', '$span': start
2024-12-18T14:51:52.401066+08:00 [debug] msg: ensure_local_table, table: '$mria_rlog_sync', '$span': {complete,true}
2024-12-18T14:51:52.402216+08:00 [debug] msg: ensure_local_table, table: mria_schema, '$span': start
2024-12-18T14:51:52.402366+08:00 [debug] msg: ensure_local_table, table: mria_schema, '$span': {complete,true}
2024-12-18T14:51:52.403290+08:00 [info] msg: shard_bootstrap_complete
2024-12-18T14:51:52.403416+08:00 [info] msg: Bootstrap of the shard is complete, checkpoint: 1734504712400, shard: '$mria_meta_shard'
2024-12-18T14:51:52.403525+08:00 [info] msg: state_change, from: bootstrap, to: local_replay, shard: '$mria_meta_shard'
2024-12-18T14:51:53.696672+08:00 [debug] msg: Trying to connect to the core node, node: 'emqx@172.31.195.121'
2024-12-18T14:51:53.697827+08:00 [debug] msg: Connected to the core node, node: 'emqx@172.31.195.121', shard: emqx_cluster_rpc_shard, seqno: 13764407
2024-12-18T14:51:53.698013+08:00 [info] msg: state_change, from: disconnected, to: bootstrap, shard: emqx_cluster_rpc_shard
2024-12-18T14:51:53.699024+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_commit, '$span': start
2024-12-18T14:51:53.699185+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_commit, '$span': {complete,true}
2024-12-18T14:51:53.700171+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_mfa, '$span': start
2024-12-18T14:51:53.700306+08:00 [debug] msg: ensure_local_table, table: cluster_rpc_mfa, '$span': {complete,true}
2024-12-18T14:51:53.701272+08:00 [info] msg: shard_bootstrap_complete
2024-12-18T14:51:53.701389+08:00 [info] msg: Bootstrap of the shard is complete, checkpoint: 1734504713698, shard: emqx_cluster_rpc_shard
2024-12-18T14:51:53.701477+08:00 [info] msg: state_change, from: bootstrap, to: local_replay, shard: emqx_cluster_rpc_shard
2024-12-18T14:52:20.878589+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:52:20.878608+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:52:43.845106+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:52:43.845136+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:52:50.854660+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:52:50.854753+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:52:50.953083+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:52:50.953274+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:29.775723+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:29.775723+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:29.904283+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:29.904235+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:30.024056+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:30.024160+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:30.133283+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:30.133297+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:30.246914+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:30.246898+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:43.183693+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:43.183851+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:43.777678+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:43.777570+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:43.860420+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:53:43.860453+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:50.802353+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:53:50.802356+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:54:02.008682+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:54:02.008680+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
2024-12-18T14:54:02.107438+08:00 [warning] Mnesia('emqx@172.31.195.122'): ** WARNING ** Mnesia is overloaded: {dump_log,write_threshold}
2024-12-18T14:54:02.107659+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
```
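To gauge how often the overload warning fires, the log above can be tallied per minute. What follows is only a minimal Python sketch, not an EMQX tool. It assumes the log has been saved to a file (the name `emqx.log` is hypothetical) and that lines keep the timestamp and `[warning]` layout shown above. Since every event is logged twice, it counts only the short `Mnesia overload:` form and skips the longer `** WARNING **` form.

```python
import re
from collections import Counter

# Matches only the short form of the warning, e.g.
#   2024-12-18T14:52:20.878589+08:00 [warning] Mnesia overload: {dump_log,write_threshold}
# Group 1 is the timestamp truncated to the minute, group 2 is the overload reason.
OVERLOAD = re.compile(
    r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}):\d{2}\.\d+\S*\s+"
    r"\[warning\] Mnesia overload: (\{.*\})\s*$"
)


def overloads_per_minute(lines):
    """Count short-form Mnesia overload warnings per (minute, reason)."""
    counts = Counter()
    for line in lines:
        match = OVERLOAD.match(line.strip())
        if match:
            counts[(match.group(1), match.group(2))] += 1
    return counts


if __name__ == "__main__":
    # "emqx.log" is a placeholder path, not a file named in this post.
    with open("emqx.log", encoding="utf-8") as fh:
        for (minute, reason), n in sorted(overloads_per_minute(fh).items()):
            print(minute, reason, n)
```

The function accepts any iterable of lines, so it can also be fed a list of pasted log lines instead of a file.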